Kubernetes Nginx Ingress with Docker Desktop

Overview

I was recently diagnosing an issue at work where a service was configured with multiple differing ingress resources. The team’s reasoning for this was entirely reasonable and, above all, everything was working as expected.

However, once we tried to abandon Azure Dev Spaces and switch to Bridge to Kubernetes (“B2K”), we quickly discovered that this setup wasn’t going to work straight out of the box: B2K doesn’t support multiple ingresses configured with the same domain name. Its Envoy proxy reports the following error:

```
Only unique values for domains are permitted.
Duplicate entry of domain {some-domain.something.com}
```

As a result, I decided the best course of action was to understand the routing that the team had enabled, and work out a more efficient way of handling the routing requirements using a single ingress resource.

To make this as simple as possible, I decided to get a sample service up and running locally so I could verify scenarios without having to deploy into a full cluster.

Docker Desktop

I’m using a Mac at the moment, but most (if not all) of the commands here will work on Windows too, especially if you use WSL2 rather than PowerShell.

The Docker Desktop version I have installed is 2.4.0.0 (stable), the latest stable version at the time of writing.

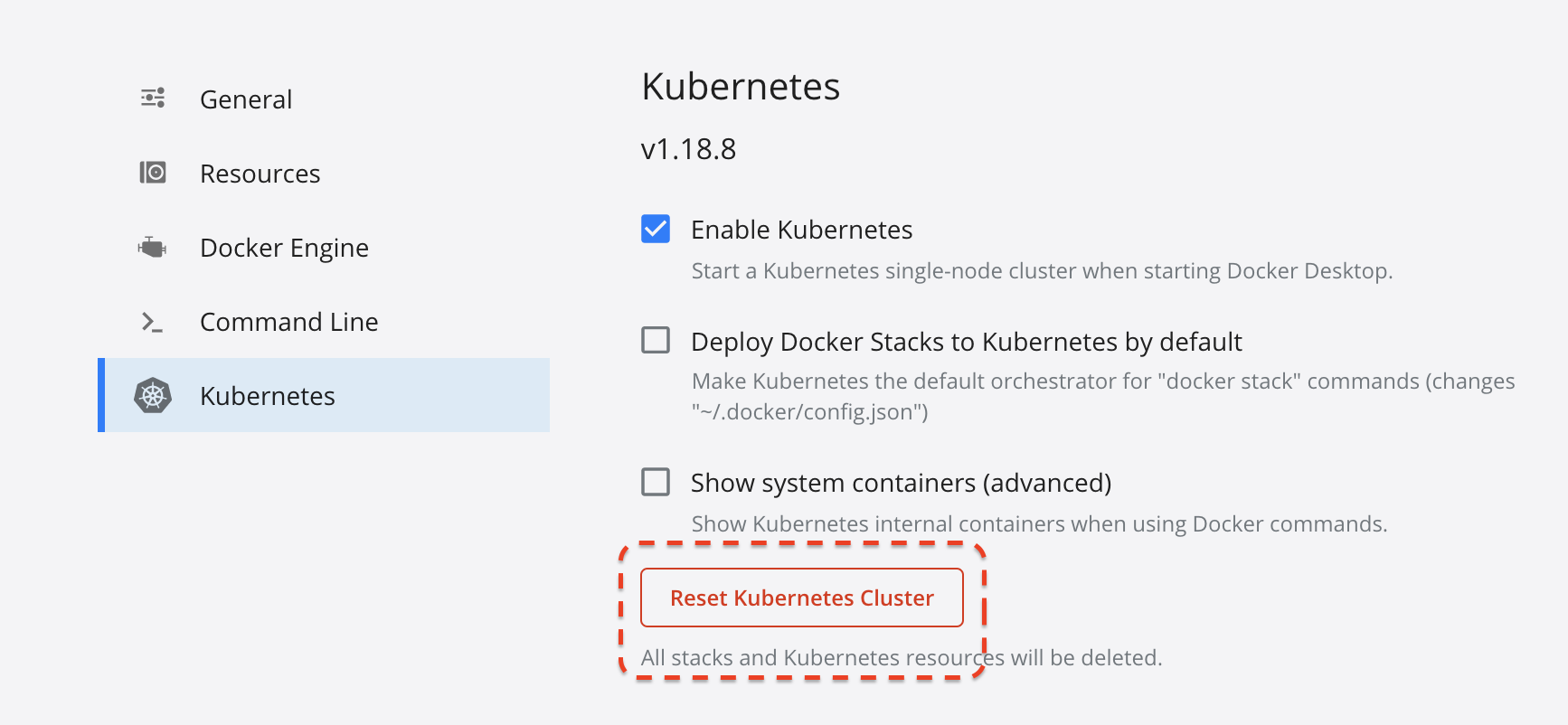

I already had the Kubernetes integration enabled, but I also had a version of Linkerd running there that I didn’t want interfering with what I was doing. To get around this, I used the Docker admin GUI to “reset the Kubernetes cluster”.

If you don’t already have Docker Desktop installed, go to https://docs.docker.com/desktop/ and follow the instructions for your OS.

Once installed, ensure that the Kubernetes integration is enabled.

Note: You don’t need to enable “Show system containers” for any of the following steps to work.

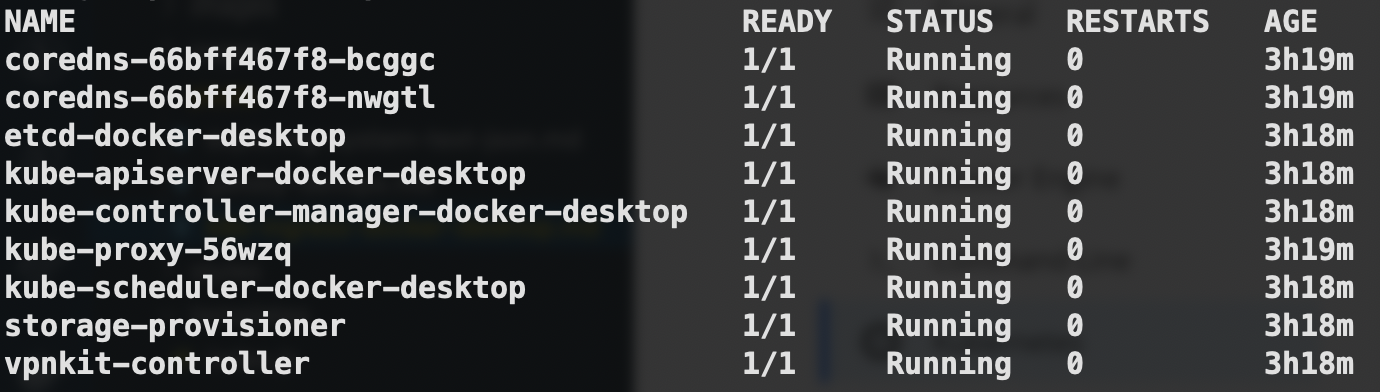

Now you should be able to verify that your cluster is up and running:

Note: I’ve aliased

kubectltok, simply out oflazinessefficiency.

k get po -AThis will show all pods in all namespaces:

Install Nginx

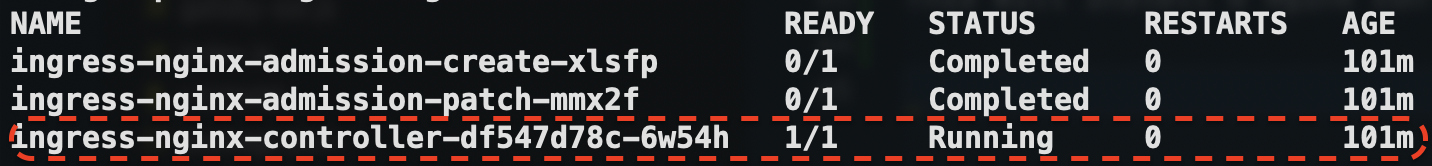

Now that we have a simple single-node cluster running under Docker Desktop, we need to install the Nginx ingress controller:

Tip: It’s not best practice to blindly install Kubernetes resources from YAML files taken straight from the internet. If you’re in any doubt, download the YAML file and save a copy of it locally. That way, you can inspect it and ensure that it’s always consistent when you apply it.

```
k apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.40.2/deploy/static/provider/cloud/deploy.yaml
```

This will install an Nginx controller in the `ingress-nginx` namespace:

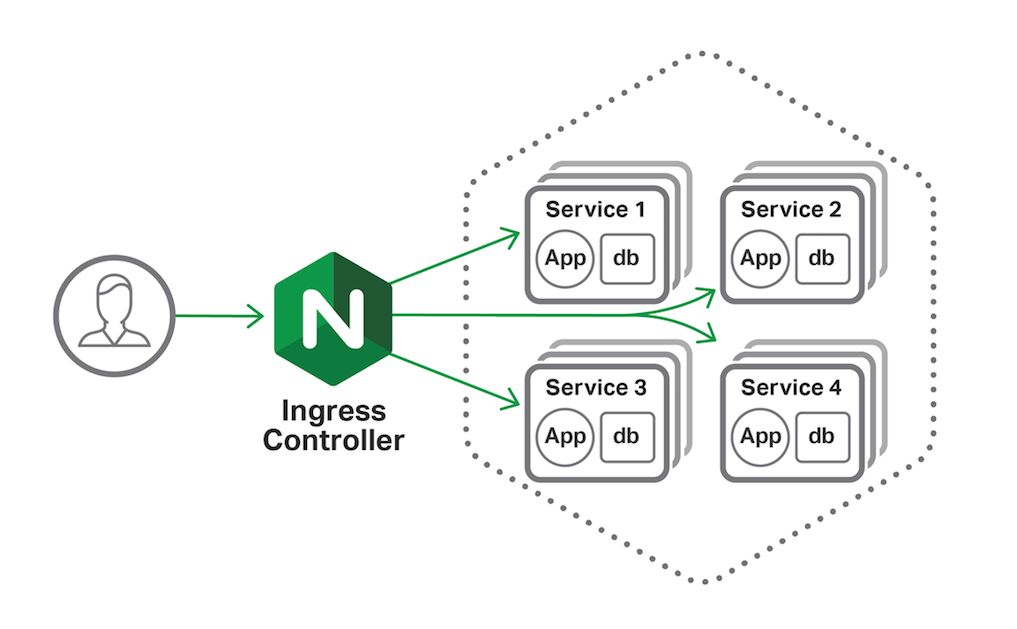
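The tip about keeping a local copy of the manifest can be taken one step further. When you first download the file, record a SHA-256 digest, and compare against it before each `apply`. Below is a minimal standard-library Python sketch of that idea. The helper names and the local filename are illustrative assumptions and are not part of ingress-nginx or the original setup.

```python
import hashlib
import urllib.request
from pathlib import Path

# Manifest URL used in this article (ingress-nginx controller-v0.40.2, cloud provider).
MANIFEST_URL = (
    "https://raw.githubusercontent.com/kubernetes/ingress-nginx/"
    "controller-v0.40.2/deploy/static/provider/cloud/deploy.yaml"
)


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a lowercase hex string."""
    return hashlib.sha256(data).hexdigest()


def download_manifest(url: str, dest: Path) -> str:
    """Save the manifest at url to dest and return the digest of the saved bytes."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        data = resp.read()
    dest.write_bytes(data)
    return sha256_hex(data)


def manifest_unchanged(path: Path, expected_digest: str) -> bool:
    """True if the local copy still matches the digest recorded at download time."""
    return sha256_hex(path.read_bytes()) == expected_digest
```

The workflow would be:

1. Call `download_manifest(MANIFEST_URL, Path("ingress-nginx-v0.40.2.yaml"))` once.
2. Review the file and note the returned digest.
3. Before running `k apply -f ingress-nginx-v0.40.2.yaml`, check that `manifest_unchanged(...)` still returns `True`.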

Routing

Now that you have installed the Nginx controller, you need to make sure that any deployments you make use a service of type NodePort rather than the default ClusterIP:

```
apiVersion: v1
kind: Service
metadata:
  name: my-service
  namespace: my-namespace
  labels:
    app: my-app
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: http
      protocol: TCP
      name: http
  selector:
    app: my-app
```

Domains

I’ve used a sample domain of `chart-example.local` in the Helm charts for this repo. For this to resolve locally, you need to add an entry to your hosts file.

On a Mac, edit `/private/etc/hosts`; on Windows, edit `C:\Windows\System32\Drivers\etc\hosts`. In either case, add the following line at the end:

```
127.0.0.1 chart-example.local
```

Now you can run a service on your local machine and make requests to it using the ingress routes you define in your deployment.
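Editing the hosts file by hand is the simplest option. If you would rather script it, the following Python sketch appends `127.0.0.1 chart-example.local` only when that hostname is not already mapped, so it is safe to run more than once. The function names are hypothetical. Writing to the real hosts file needs elevated rights (`sudo` on a Mac, an administrator prompt on Windows).

```python
from pathlib import Path


def ensure_hosts_entry(hosts_text: str, ip: str, hostname: str) -> str:
    """Return hosts_text with an 'ip hostname' line appended, unless some
    non-comment line already maps hostname (to any address)."""
    for line in hosts_text.splitlines():
        # Drop trailing comments; the first field is the address, the rest are names.
        fields = line.split("#", 1)[0].split()
        if len(fields) >= 2 and hostname in fields[1:]:
            return hosts_text
    if hosts_text and not hosts_text.endswith("\n"):
        hosts_text += "\n"
    return f"{hosts_text}{ip} {hostname}\n"


def update_hosts_file(path: Path, ip: str = "127.0.0.1",
                      hostname: str = "chart-example.local") -> bool:
    """Apply ensure_hosts_entry to the file at path; return True if it changed."""
    original = path.read_text()
    updated = ensure_hosts_entry(original, ip, hostname)
    if updated != original:
        path.write_text(updated)
    return updated != original
```

To use it:

- On a Mac, call `update_hosts_file(Path("/private/etc/hosts"))`.
- On Windows, call `update_hosts_file(Path(r"C:\Windows\System32\Drivers\etc\hosts"))`.

An existing mapping of the hostname to a different address is deliberately left alone rather than overwritten.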

The rest of this article describes a really basic .NET Core application to prove that the routing works as expected. .NET Core is absolutely not required; this is just a simple example.

Sample Application

The concept for the sample application is a simple one.

There will be three different API endpoints in the app:

- `/foo/{guid}` will return a new `foo` object in JSON
- `/bar/{guid}` will return a new `bar` object in JSON
- `/` will return a `200 OK` response and will be used as a liveness and readiness check
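To make the three endpoints concrete, here is a rough Python stand-in for their behaviour. It is not the author's .NET Core code, which lives in the sample repository. The function name `handle` and the choice to return 404 for a malformed GUID or an unknown path are assumptions made for illustration.

```python
import json
import uuid
from typing import Tuple


def handle(path: str) -> Tuple[int, str]:
    """Hypothetical stand-in for the sample API: map a request path to
    (HTTP status, response body)."""
    if path == "/":
        # Liveness/readiness probe target: a bare 200 OK.
        return 200, ""
    parts = path.strip("/").split("/")
    if len(parts) == 2 and parts[0] in ("foo", "bar"):
        try:
            reference = str(uuid.UUID(parts[1]))  # reject anything that isn't a GUID
        except ValueError:
            return 404, ""
        body = {"type": parts[0].capitalize(), "reference": reference}
        return 200, json.dumps(body)
    return 404, ""
```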

The point we’re trying to prove is that API requests to /foo/{guid} resolve correctly to the /foo/* route, and requests to /bar/{guid} resolve correctly to the /bar/* route.

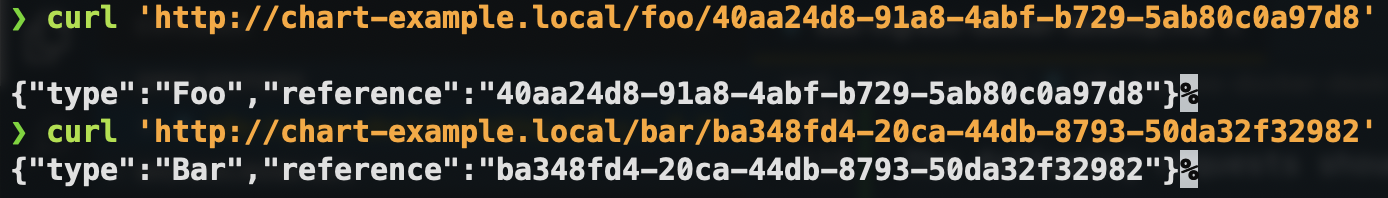
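As a mental model for how a request path is matched to one of the ingress paths, the Python sketch below implements Kubernetes' `Prefix` path-type semantics: the longest rule path that matches the request on whole `/`-separated elements wins. This is a simplification, not ingress-nginx's actual implementation. With the `ImplementationSpecific` path type its behaviour may differ, for example in how it treats a path like `/foobar`.

```python
from typing import List, Optional, Sequence


def _elements(path: str) -> List[str]:
    """Split a URL path into its non-empty '/'-separated elements."""
    return [part for part in path.split("/") if part]


def match_prefix(request_path: str, rule_paths: Sequence[str]) -> Optional[str]:
    """Return the rule path that is the longest element-wise prefix of
    request_path (Kubernetes 'Prefix' semantics), or None if nothing matches."""
    request = _elements(request_path)
    best: Optional[str] = None
    best_len = -1
    for rule in rule_paths:
        elems = _elements(rule)
        if request[:len(elems)] == elems and len(elems) > best_len:
            best, best_len = rule, len(elems)
    return best
```

Under this model, `/foo/{guid}` lands on `/foo` and `/bar/{guid}` lands on `/bar`, while `/foobar` matches neither rule.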

The following requests should return the expected results:

```
curl 'http://chart-example.local/foo/40aa24d8-91a8-4abf-b729-5ab80c0a97d8'
```

This should return an object matching the following:

```
{
  "type": "Foo",
  "reference": "40aa24d8-91a8-4abf-b729-5ab80c0a97d8"
}
```

Similarly, a request to the `/bar/*` endpoint:

```
curl 'http://chart-example.local/bar/ba348fd4-20ca-44db-8793-50da32f32982'
```

This should return an object matching the following:

```
{
  "type": "Bar",
  "reference": "ba348fd4-20ca-44db-8793-50da32f32982"
}
```

The sample code for this application can be found at https://github.com/michaelrosedev/sample_api.
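If you want to repeat the two `curl` checks without eyeballing the output, a small Python script along these lines could do it. It assumes the hosts entry and ingress are already in place and that the controller answers on port 80. `check_payload`, `fetch_json` and `verify_routes` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Any, Dict

# Hostname from the hosts-file entry; assumes the ingress controller answers on port 80.
BASE_URL = "http://chart-example.local"

# (path segment, expected "type", GUID) taken from the example requests.
CASES = [
    ("foo", "Foo", "40aa24d8-91a8-4abf-b729-5ab80c0a97d8"),
    ("bar", "Bar", "ba348fd4-20ca-44db-8793-50da32f32982"),
]


def check_payload(payload: Dict[str, Any], expected_type: str, reference: str) -> bool:
    """True if the JSON body has the expected "type" and echoes the GUID back."""
    return payload.get("type") == expected_type and payload.get("reference") == reference


def fetch_json(url: str, timeout: float = 5.0) -> Dict[str, Any]:
    """GET url and decode the JSON response body (raises HTTPError on e.g. a 503)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def verify_routes() -> bool:
    """Request each example URL through the ingress and report whether it matched."""
    all_ok = True
    for segment, expected_type, reference in CASES:
        url = f"{BASE_URL}/{segment}/{reference}"
        payload = fetch_json(url)
        ok = check_payload(payload, expected_type, reference)
        all_ok = all_ok and ok
        print(f"{'OK' if ok else 'MISMATCH'}: {url} -> {payload}")
    return all_ok
```

Keeping `check_payload` separate from the network call means the comparison logic can be exercised without a running cluster.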

Dockerfile

The Dockerfile for this sample application is extremely simple: it uses the .NET Core SDK to restore dependencies and build the application, then uses a second stage to copy the build artifacts into an Alpine image with the .NET Core runtime.

Note: This image does not follow best practices - it simply takes the shortest path to get a running service. For production scenarios, you don’t want to be building containers that run as root and expose low ports like 80.

Helm

The Helm chart in this repo was generated automatically with `mkdir helm && cd helm && helm create sample`. I then made the following changes:

- Added a `namespace` value to the `values.yaml` file
- Added usage of the `namespace` value in the various Kubernetes resource manifest files to make sure the application is deployed to a specific namespace
- Changed the `image.repository` to `mikrose/sample` (my Docker Hub account) and the `image.version` to `1.0.1` (the latest version of my sample application)
- Changed `service.type` to `NodePort` (because `ClusterIP` won’t work without a load balancer in front of the cluster)
- Enabled the `ingress` resource (because that’s the whole point of this exercise)
- Added the paths `/foo` and `/bar` to the ingress in `values.yaml`:

```
ingress:
  enabled: true
  annotations:
    kubernetes.io/ingress.class: nginx
  hosts:
    - host: chart-example.local
      paths:
        - /foo
        - /bar
```

Namespace

All the resources in the service that has the issue (see Overview) are in a dedicated namespace, and I want to reflect the same behaviour here.

The first thing I need to do, then, is add the desired namespace (`sample`) to my local cluster:

```
k create ns sample
```

This will create a new namespace called `sample` in the cluster.

Now we can install the Helm chart. Make sure you’re in the ./helm directory, then run the following command:

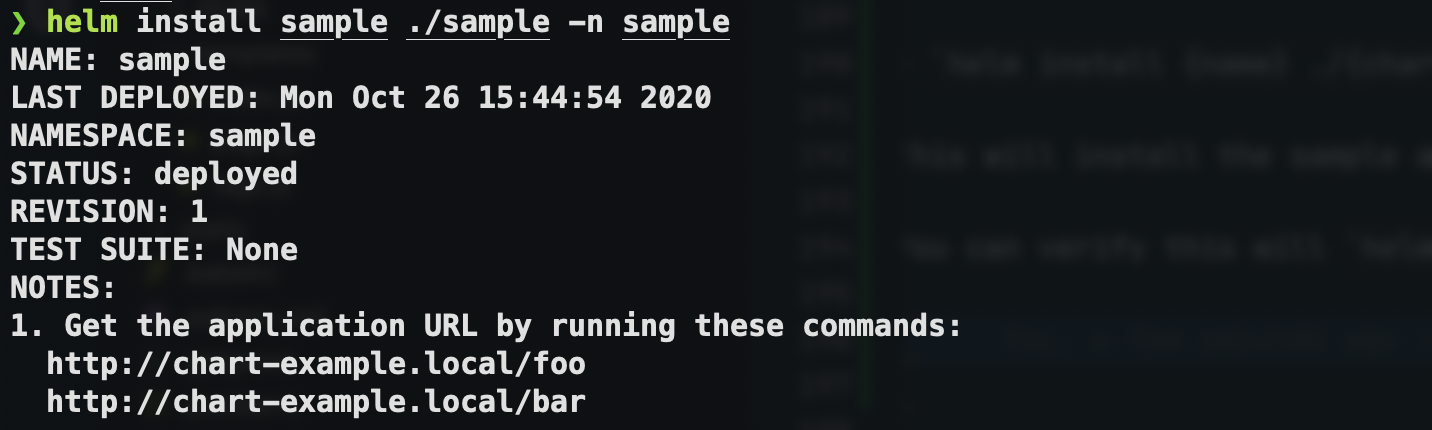

```
helm install {name} ./{chart-dir} -n {namespace}
```

In this case:

```
helm install sample ./sample -n sample
```

This will install the sample application into the sample namespace.

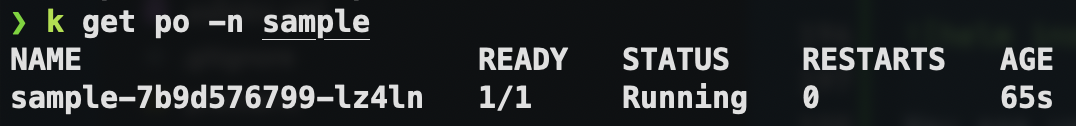
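It is worth seeing what the chart produces from the `ingress` values. Both paths sit under one host in a single value, so the chart renders one Ingress resource. Its single rule sends both paths to the same service, which avoids the duplicate-domain error B2K reports.

The Python sketch below roughly mirrors what the default `helm create` ingress template of that era does with `ingress.hosts`. Your chart's template may differ. The `serviceName`/`servicePort` backend fields belong to the `networking.k8s.io/v1beta1` Ingress API. The service name `sample` and port `80` are assumptions, based on a release and chart both named `sample` and the default `service.port`.

```python
from typing import Any, Dict, List

# ingress.hosts from values.yaml, written as a Python literal.
INGRESS_HOSTS = [{"host": "chart-example.local", "paths": ["/foo", "/bar"]}]


def render_ingress_rules(hosts: List[Dict[str, Any]], service_name: str,
                         service_port: int) -> List[Dict[str, Any]]:
    """Expand ingress.hosts into Ingress spec.rules: one rule per host, and every
    path under that host routed to the same backend service."""
    rules = []
    for entry in hosts:
        paths = [
            {"path": path,
             "backend": {"serviceName": service_name, "servicePort": service_port}}
            for path in entry["paths"]
        ]
        rules.append({"host": entry["host"], "http": {"paths": paths}})
    return rules


# Assumed values: release and chart both named "sample", default service.port of 80.
rules = render_ingress_rules(INGRESS_HOSTS, service_name="sample", service_port=80)
```

To compare this against what was actually deployed, you could inspect the real object with `k get ingress -n sample -o yaml`.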

You can then verify that the pod is running with `k get po -n sample`:

Tip: If you need to troubleshoot 503 errors, first make sure you have changed your service to use a `service.type` of `NodePort`. Ask me how I know this…
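Following that tip, this Python sketch lists any services in a namespace whose type is not `NodePort`. It shells out to `kubectl get svc -n <namespace> -o json`. It uses the full `kubectl` name because a shell alias like `k` isn't visible to `subprocess`. The function names are hypothetical.

```python
import json
import subprocess
from typing import Any, Dict, List


def services_not_nodeport(svc_list: Dict[str, Any]) -> List[str]:
    """Names of services in a `kubectl get svc -o json` List that aren't NodePort.
    A missing spec.type is treated as Kubernetes' default, ClusterIP."""
    return [
        item["metadata"]["name"]
        for item in svc_list.get("items", [])
        if item.get("spec", {}).get("type", "ClusterIP") != "NodePort"
    ]


def check_namespace(namespace: str = "sample") -> List[str]:
    """Run kubectl against the current context and return the offending service names."""
    result = subprocess.run(
        ["kubectl", "get", "svc", "-n", namespace, "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return services_not_nodeport(json.loads(result.stdout))
```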

Now you can make requests to your service, for example with the `curl` commands from the Sample Application section, and verify that your routes are working as expected.

And that’s it - we now have a working ingress route that we can hit from our local machine.

That means that it should be straightforward to configure and experiment with routing changes without having to resort to deploying into a full cluster - you can speed up your own local feedback loop and keep it all self-contained.

I will now be using this technique to wrap up an existing service and optimise the routing.